Data modeling for systems analysis

Data modeling is a process of designing and developing a data system by taking all the information that would be needed to support the various business processes of the oraganisation (Ponnaih). It is created to describe the structure of the data handled in information systems and persisted in database management systems. That structure is often represented in entity-relationship diagrams or UML class diagrams (Unified Modeling Language is an object oriented software engineering used to model an application Structures, behaviours and business processes) (Merson, Paulo 2009). It includes the formalization and documentation of existing processes and events that occur during application software design and development. Data modeling techniques and tools capture and translate complex system designs into easily understood representations of the data flows and processes, creating a blueprint for construction and/or re-engineering.

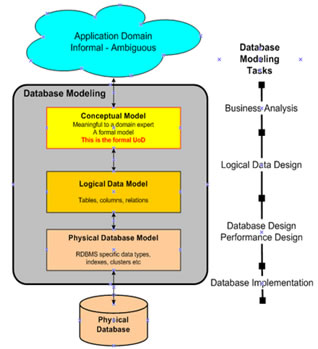

Figure: 1- Thought process behind the data modeling[3]

Database is an important asset of business. To design, use and maintain such important asset many people are involved. System analysts determine the requirements of the database users to create a solution for their business need, and focus on non-technical and technical aspects (Itl Education Solutions Limited). The non-technical aspects involve defining system requirements, facilitating interaction between business users and technical staff, etc. Technical aspects involve developing the specification for user interface (application programs), Application programmers are the computer professionals who implement the specifications given by the system analysts, and develop application programs.

Figure: 2- Basic flow control on the data modeling[3]

Types of data modeling

From the actual database to be used for the information systems, the types of data modeling are expalines as Conceptual model (technology-independent model), Enterprise data model, Logical model (Tables, columns, relations), Physical model (MySQL, Oracle or Microsoft SQL Server) (Simsion, Graeme, Graham, 2005) .

Figure:3 Defines Three types of data modeling

Conceptual Data Modeling - The Conceptual model helps designers capture the real data requirements (Graeme Simsion). A conceptual data model is a model of the things in the business and the relationships among them, rather than a model of the data about those things (Matthew West, 2011). It identifies relationships between different entities and it is a high-level model. It is the first step of the top-down Database Development Process. It is a detailed model that captures the overall structure of data in an organization. The goal of Conceptual Data Modeling is to develop an entity-relationship model that represents the information requirements of the business. Its main purpose is to model the functional and information needs of a business.

Figure:4- Conceptual Data Modeling[5]

Enterprise Data Modeling – An enterprise data model (also called as corporate data model) is a model that covers the whole of, or a substantial part of, an organization (Simsion, Graeme, Graham, 2005). It is very similar to conceptual data modeling, but it differs in addresses and its unique requirements of a specific business. The development of common consistent view and understanding of data elements and their relationships across the enterprise is referred to as Enterprise Data Modeling. This type of data modeling provides access to information scattered throughout an enterprise under the control of different isolated departments with unique databases and data models. Enterprise Data Modeling is sometimes called as global business model and the entire information about the enterprise would be captured in the form of entities.

Figure:5 Enterprise Data Modeling

The top 3 levels (ESAM, ECM & EDOM) are the Enterprise or Conceptual layer in the Master data management methodology (MDM Methodology) which is a process that aims to standardize data and eliminate errors (Murphy, david, 2008). The ESAM (Enterprise Subject Area Model) includes the Business Subject Areas and their sub layers. The ECM (Enterprise Conceptual Model) is the Entity Relationship Diagram at the Enterprise Layer which includes the Business Entities and related business rules. EDOM (Enterprise Data Object Model) is the MDM area which includes the Base Data Elements and Base Entities. The bottom three levels are the Project layer including the LDM (Logical Data Model) which is a relational presentation of the Business Function. The LDM is defined by selecting the Business Function from a subset of the ECM, expanding the entities and relationships to include the business functions being addressed in the project and adding the Data Elements expanded to their business rule usage. The LTM (Logical transformational model) is the relational transformation to the target DBMS for the project including any performance enhancements. The PM (Physical Model) is the actual DBMS coding to implement the project.

Logical Data Modeling – is a tailored type of database management system which represents the specific entities, attributes and relationships involved in a business function (Andy Oppel, 2009). It is a basis for the creation of the physical data model. A logical model contains representations of entities and attributes, relationships, unique identifiers (Primary key), subtypes and super types, and constraints between relationships. A logical model can also contain domain model objects, or reference one or more domain or glossary models. After logical objects and relationships are defined in a logical data model, you can use the workbench to transform the logical model into a database-specific physical representation in the form of a physical data model.

Figure:6 Logical Data Modeling [5]

Physical Data Modeling - represents an application and database-specific implementation of a logical data model. It includes all the required tables, columns, relationships, database properties for the physical implementation of databases. Database performance, indexing strategy, physical storage and de-normalization are important parameters of a physical model. Physical data modeling has some tasks that are to be performed in an iterative manner such as identifying tables, normalize tables, identifying columns, identifying stored procedures, applying naming conventions, identifying relationships, applying data model patterns and assigning keys (agilemodeling.com).

Figure:7 Physical Data Modeling [5]

How Data Models Fit into Application Development?

Organizations undertake application development methodologies in order to develop application systems (Andy Oppel, 2009).

The Process oriented methodology which is a traditional application development includes typical process steps of collecting all the screens, reports, and interface files from the process design, isolating the data elements (attributes), and creating an initial logical model by using the normalization process. Normalization is a technique for producing a set of relations that possesses a certain set of properties. A typical criticism of this model is the water fall effect where each step depends on the previous steps that are difficult and expensive to go back if errors are discovered. While data modelling being essentially complete by the time construction of the application programs begins. Models must be flexible enough to control the inevitable changes that occur during the development process.

The Data-oriented methodologies were popular in the late 1970’s where data modelers study the data and use the data requirements to infer the processing requirements.

Hybrid methodologies, also known as parallel or blended methodologies, call for development of process models and data models in parallel. This has the criticism that it requires additional effort.

Object-oriented methodologies follows principles that naturally include data design and process design as objects are composed of variables (attributes) and methods (processes) that must be designed to operate together as a single unit. Most object modelers prefer Unified Modeling Language (UML) diagrams over the types of data models.

Prototyping Methodologies evolved from the notation that the comprehensive documentation of a system’s requirements and development were unnecessary. These methodologies had project teams learn expensive lessons.

Agile methodologies are iterative and incremental which provides opportunities to assess the direction of a project throughout the development lifecycle. This is achieved through regular modulation of work, known as sprints or iterations, at the end of which teams must present a potentially shippable product increment.

Data Modeling Life Cycle

Data modeling is usually conducted relatively early in a systems development project. The life cycle of a database encompasses all the events that occur from the time you first recognize the need for a database, through its development and deployment, and finally ending with the day the database is retired from service.

The traditional method for developing computer systems follows a process called the system development life cycle (SDLC). It includes:

Planning: Feasibility study is usually launched to determine whether the project can be reasonably expected to achieve the objective and whether preliminary estimates of time, staff, and materials required for the project fit within the required timeframe and available budget.

Requirements gathering: The project team must gather and document a high-level, yet precise, description of what the project is to accomplish. The focus must be on what rather than how; the how is developed during the subsequent design phases.

Conceptual design: The conceptual design phase involves designing the externals of the applications and databases. In fact, many methodologies use the term external design for this project phase. The layout of reports, screens, forms, web pages, and other data entry and presentation vehicles are finalized during this phase

Logical design: During logical design, the bulk of the technical design of the applications and databases included in the project is carried out. Many methodologies call this phase internal design, because it involves the design of the internals of the project that the business users will never see.

Physical design: During the physical design phase, the logical design is mapped or converted to the actual hardware and systems software that will be used to implement the applications and databases.

Construction During the construction phase, the application developers code and test the individual programming units. Tested program units are promoted to a system test environment, where the entire application and database system is assembled and tested from end to end.

Implementation and roll out Implementation: It is the process of installing the new application system’s components (application programs, forms or web pages, reports, database objects, and so on) into the live system and carrying out any required data conversions. Rollout is the process of introducing groups of business users to the new application.

Ongoing support Once a new application system and database have been implemented in a production environment, support of the application is often turned over to a production support team. This team must be prepared to isolate and respond to any issues that may arise, which could include performance issues, abnormal or unexpected results, complete failures, or the inevitable requests for enhancements.

Challenges of Data Modelling in the Real World:

Understanding of practicalities in real business environment is important to build a proper data model.

The first challenge is to ensure that the project plan allows for the development and proper use of high quality data models.

The second challenge is to actually develop these models – specifically, to develop a series of deliverables that will be in a physical data model and provide sufficient information for other participants in the project to carry out their work.

Thirdly, maintaining the data model as the change during its development are inevitable due to stakeholders’ increasing understanding of the implications of the system proposed may prompt them to suggest changes to the data structures originally discussed (Simsion, Graeme, Graham, 2005).

What makes a good data model?

Design a model that is neatly presenting the required data in the least possible number of groups and tables which is flexible enough to adapt to new changes without forcing the programmer to re-write the code. Maintaining and recording every piece of information required for a system is very important. Data should be recorded only once to prevent repetition which results in inconsistency and increased storage requirements (Daniel L). Business rules should be followed in collecting the data. Most importantly, a model should present the data in a manner understandable to all stakeholders.

Data modeling tools available in market: In today‘s increasingly heterogeneous environment – particularly with the advent of cloud computing – it is important that data modeling tools have some general characteristics. They should: 1) be flexible and have the ability to interchange with other tools 2) include a strong set of documentation and online resources, and 3) feature an intuitive, well-designed user interface (Craig Boyd, 2011). Business models and data models constantly change. The data modeling tools help solve the dynamic of business needs. DBWrench, Oracle designer, Data architect, database visual architect, power designer, ERstudio, Microsoft Visio are some of the data modeling tools in the market.

Summary:

Data modeling is usually done at a system or a company level, but a new effort is under way to define data models for whole industries (Chmielewski, Christy and Allen).

Data modeling must be executed with proper planning and analysis. Proper planning sets the goals of the database, explains why the goals are important, and sets out the path by which the goals will be accomplished. Analysis involves determining the requirements of the database. This is typically done by examining right documentation.

The goal is to offer a self-service type of environment which allows business users easy access with acceptable Response times. Response times also include the time required between the conception of a new application and the delivery of that application. What data model do you employ to provide ease of use for the business user while still being able to address current and future needs of the data warehouse in terms of updating, expansion, availability and management.

A data model is a plan for building a database, which is effective, it must be simple enough to communicate to the end user the data structure required by the database yet detailed enough for the database design to use to create the physical structure. Data modeling is a bottom up process. A basic model, which represents entities and relationships, is the first development. Then detail is added to the model by including information about attributes and business rules. An effective data model completely and accurately represents the data requirements of the end users. It is simple enough to be understood by the end user yet detailed enough to be used by a database designer to build the database. The model eliminates redundant data, it is independent of any hardware and software constraints, and can be adapted to change requirements with a minimum effort.

1. Ponniah, Paulraj. Data Modeling Fundamentals: A Practical Guide for ITProfessionals. New York: Wiley-Interscience, 2007.

2.

Itl Education Solutions Limited. Introduction to Database systems, India: Pearson.

3. Srinivasulu Uppari,

http://biappsdwh.blogspot.com/2013/01/part-1-data-modeling-overview.html

4.

The data model resource book, Atlanta: NewsRx, 2009.

5. http://www.1keydata.com/datawarehousing/data-modelling-levels.html.

6.

Simsion, Graeme C. Witt, Graham C, Data modelling Essentials, Boston: Morgan Kaufmann,2005.

7.

Matthew West, 2011, www.tdan.com/view-articles/15402.

8.

Xinyu geng, Xiaolei Feng, Xiaoyan Huang, A research on data modelling tools based on the dynamic enterprise modeling technology, China, ICCIS, 2010.

9.

Graeme Simsion, Data modeling theory and practice.

10. Murphy, David. Master Data Management, UK, Haymarket Business Publications Ltd, 2008: 1.

11. Learn Data Modeling. 2005 http://www.learndatamodeling.com

12.

Merson, Paulo F. “Data Model as an Architectural View”. Carnegie Mellon University (2009).

13. http://creately.com/blog/diagrams/uml-diagram-types-examples

14. http://www.agiledata.org/essays/dataModeling101.html

15. http://docs.oracle.com/cd/E15276_01/doc.20/e13677/data_modeling.htm

16. Teorey, Toby J, Data Modeling and design, Boston: Morgan Kaufmann, 2005.

17.

Dion, Pierre-Jean, Data Modeling Defines Business Domains, 2007.

18.

Simsion, Graeme. Data Modeling description or design?, Information &management, 2012

19. Andy Oppel, Data Modeling, New York, McGraw-Hill, 2009.

20.CraigBoyd, http://www.information-management.com/media/pdfs/MySoftForge.pdf, 2011.

21.

Data modeling, Chmielewski, Christy; Allen, Leilani, (Apr 1997): 85,88.

22. Daniel; L. Moody, http://library.riphah.edu.pk/acm/disk_1/text/2-1/er94/ER94-P094.pdf

23.http://www.agilemodeling.com/artifacts/physicalDataModel.htm